Table of Contents

- Why “Claude Opus 4.1 vs GPT-5” matters in 2025 for reasoning, speed, and cost

- Claude Opus 4.1 vs GPT-5 in 2025, what changed in reasoning and tool use

- Claude Opus 4.1 vs GPT-5 benchmarks that matter for coding and analysis

- Headline coding scores, vendor reported

- Claude Opus 4.1 vs GPT-5 speed, latency, and efficient thinking in daily use

- Claude Opus 4.1 vs GPT-5 cost and context, real numbers for 2025 budgets

- Claude Opus 4.1 vs GPT-5 safety, governance, and enterprise fit

- Who should pick which model, a practical split for builders and marketers

- Claude Opus 4.1 vs GPT-5, feature and pricing comparison table

- Field notes for our audience, tech, marketing, business

- A note on energy and operational transparency

- The last word before you choose

Why “Claude Opus 4.1 vs GPT-5” matters in 2025 for reasoning, speed, and cost

The last twelve months compressed three product cycles into one. The result is two frontier models that change how small teams ship work and how growth teams scale experiments. GPT-5 arrives with higher scores on practical coding and agentic tool use, plus aggressive pricing, while Claude Opus 4.1 refines Anthropic’s focus on sustained, careful work in long sessions with a conservative safety posture. This article tracks the data that matters, then translates it into an action plan for developers, performance marketers, and operators who value time to answer, cost control, and reliability. We cite primary sources, avoid hype, and keep the guidance practical for daily use. [1][2]

Tool use and planning, where GPT-5’s controls and Opus 4.1’s steadiness show up

Claude Opus 4.1 vs GPT-5 in 2025, what changed in reasoning and tool use

OpenAI positions GPT-5 as a unified system that knows when to think longer and when to answer directly. In the API it adds explicit controls for reasoning effort and verbosity, along with stronger tool calling that chains actions with fewer failures. This is not just a language upgrade; it is a workflow upgrade for agents that plan, call tools, recover from errors, and finish more tasks end to end. [1][2]
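To make the two controls concrete, here is a minimal sketch that only builds a Chat Completions request body and sends nothing. It assumes the `gpt-5` model name and the `reasoning_effort` and `verbosity` parameters with the values OpenAI described at launch; the helper `build_gpt5_request` is hypothetical, and the allowed values should be checked against the current API reference.

```python
import json

# Values as described at GPT-5's launch (assumption: verify against current docs).
REASONING_EFFORTS = ("minimal", "low", "medium", "high")
VERBOSITY_LEVELS = ("low", "medium", "high")


def build_gpt5_request(prompt: str, effort: str = "minimal", verbosity: str = "low") -> dict:
    """Build (but do not send) a hypothetical Chat Completions request body for GPT-5."""
    if effort not in REASONING_EFFORTS:
        raise ValueError(f"unknown reasoning effort: {effort}")
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unknown verbosity: {verbosity}")
    return {
        "model": "gpt-5",
        "messages": [{"role": "user", "content": prompt}],
        "reasoning_effort": effort,  # how much hidden thinking the model may do
        "verbosity": verbosity,      # how long the visible answer should be
    }


if __name__ == "__main__":
    fast = build_gpt5_request("Classify this ticket: 'refund not received'")
    deep = build_gpt5_request("Plan a schema migration for the orders table",
                              effort="high", verbosity="medium")
    print(json.dumps(fast, indent=2))
    print(deep["reasoning_effort"])
```

With a recent version of the official `openai` Python SDK, a body like this could be passed as `client.chat.completions.create(**body)`. The Responses API nests the same settings differently (a `reasoning` object with an effort field and a `text` object with a verbosity field), so treat the flat layout above as Chat Completions only.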

Anthropic’s Claude Opus 4.1 is a drop-in upgrade over Opus 4 for agentic tasks and real-world coding. It is available in the API, on Amazon Bedrock, and on Google Cloud’s Vertex AI, at the same price as Opus 4. The release notes highlight more rigorous detail tracking in research and analysis, along with continued reliability across longer task chains. [4][5]

For marketing and business readers, the takeaway is simple: GPT-5 reduces friction when you need a model that proactively executes a plan across tools, while Claude Opus 4.1 keeps Anthropic’s reputation for careful, steady output when you run many steps with low tolerance for subtle errors. [4][2]

Benchmarks guide the first choice, real tasks confirm it

Claude Opus 4.1 vs GPT-5 benchmarks that matter for coding and analysis

Benchmarks are not the job, but they signal shape. OpenAI reports GPT-5 at 74.9 percent on SWE-bench Verified and 88 percent on the Aider polyglot code-editing eval, and says it reaches comparable quality with fewer tool calls and fewer tokens on hard runs. Anthropic reports Opus 4.1 at 74.5 percent on SWE-bench Verified, a small but real bump over Opus 4, and continued strength in long-running refactoring tasks. Treat vendor numbers as vendor numbers, then triangulate with public leaderboards and methodology notes when accuracy is critical. [2][4][7]
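A quick back-of-the-envelope check shows how thin the reported SWE-bench gap is. This sketch assumes both scores were computed over the full 500-task SWE-bench Verified set and treats tasks as independent pass/fail trials; it ignores any exclusions described in vendor footnotes and uses a crude unpaired approximation, since per-task results are not published here.

```python
import math

N_TASKS = 500  # SWE-bench Verified size; assumes both vendors scored the full set


def binomial_se(p: float, n: int) -> float:
    """Standard error of a pass rate p measured on n independent tasks."""
    return math.sqrt(p * (1 - p) / n)


gpt5, opus41 = 0.749, 0.745  # vendor-reported pass rates
gap = gpt5 - opus41
# Unpaired approximation: ignores that both models ran the same tasks.
se_gap = math.sqrt(binomial_se(gpt5, N_TASKS) ** 2 + binomial_se(opus41, N_TASKS) ** 2)

print(f"gap: {gap * 100:.1f} pp, about {round(gap * N_TASKS)} tasks")
print(f"approx. standard error of the gap: {se_gap * 100:.1f} pp")
print(f"gap / SE: {gap / se_gap:.2f}")
```

Under these assumptions the 0.4-point gap is about two tasks, against a standard error of roughly 2.7 points, which is why internal validation on your own tasks matters more than the headline ordering.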

Headline coding scores, vendor reported

| Benchmark, scope | GPT-5 | Claude Opus 4.1 | Notes |
|---|---|---|---|
| SWE-bench Verified, real-world bug fixing | 74.9% | 74.5% | Vendor reported; evaluation harness and exclusions matter, so read the footnotes. [2][4] |
| Aider polyglot, code-editing diff | 88% | n/a | GPT-5 tops OpenAI’s internal runs; no Opus 4.1 figure is published here. [2] |
| Long work, sustained agentic sessions | Strong; fewer tool calls and tokens at similar quality | Strong; emphasizes detail tracking and refactoring upgrades | Qualitative, but supported by release notes. [2][4] |

Vendor disclosures and the SWE-bench site itself show that harness choices, tool access, and prompt scaffolding change results, so internal validation remains important. In production, your latency and token burn under tool use often matter more than a single score, which is where GPT-5’s minimal reasoning mode and Claude’s prompt caching become concrete levers. [2][7]

Speed is a thinking budget, not just a round trip

Claude Opus 4.1 vs GPT-5 speed, latency, and efficient thinking in daily use

Latency is not only a server round trip; it is also a thinking budget. GPT-5 exposes a reasoning_effort control: you can request minimal effort when you want speed, or higher effort when you want deeper analysis. OpenAI’s own notes describe fewer output tokens and fewer tool calls at the same quality bands, which translates into faster responses and lower unit costs during high-volume bursts. For growth teams running many small tests each day, this raises throughput without adding complexity. [2]
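One way to see why effort level acts as a latency budget is a deliberately simple model: a fixed time to first token plus decode time for every generated token, with hidden reasoning tokens generated before the visible answer. All numbers below are hypothetical placeholders, not measurements of either model, and real serving behavior is more complex.

```python
from dataclasses import dataclass


@dataclass
class Call:
    """One model call; all token counts here are hypothetical placeholders."""
    reasoning_tokens: int  # hidden thinking tokens generated before the answer
    answer_tokens: int     # visible output tokens


def estimated_latency_s(call: Call, ttft_s: float, tokens_per_s: float) -> float:
    """Rough model: fixed startup cost plus decode time for every generated token."""
    return ttft_s + (call.reasoning_tokens + call.answer_tokens) / tokens_per_s


# Hypothetical: the same task at two effort levels on the same server speed.
minimal = Call(reasoning_tokens=50, answer_tokens=150)
high = Call(reasoning_tokens=3000, answer_tokens=400)

for name, call in (("minimal", minimal), ("high", high)):
    print(name, estimated_latency_s(call, ttft_s=0.5, tokens_per_s=100.0), "s")
```

Even with identical server speed, the hidden tokens dominate: in this toy setup the high-effort call takes over ten times longer, which is the trade the effort control lets you make per request.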

Claude Opus 4.1 does not expose a reasoning_effort parameter; its extended thinking mode is steered with a thinking token budget instead. Anthropic’s platform also supports prompt caching and a Batch API, which reduce effective cost and can indirectly improve throughput for repeated contexts and large offline jobs. Anthropic documents the token overhead that tool use adds, which helps planners budget latency and cost before launch. In practice, this means stable performance on long analytical tasks and large code edits, with predictable behavior across hours. [6]
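Prompt caching works by marking a long, reused prefix so later calls can read it from cache instead of paying full input price. This minimal sketch only builds a Messages API body and sends nothing; the model alias `claude-opus-4-1` is an assumption to check against Anthropic’s model list, and `build_cached_claude_request` is a hypothetical helper.

```python
import json


def build_cached_claude_request(shared_context: str, question: str) -> dict:
    """Build (not send) a Messages API body whose long system prompt is marked cacheable."""
    return {
        "model": "claude-opus-4-1",  # assumption: confirm the current model ID
        "max_tokens": 1024,
        "system": [
            {
                "type": "text",
                "text": shared_context,                  # e.g. a style guide or codebase summary
                "cache_control": {"type": "ephemeral"},  # cache the prefix up to this block
            }
        ],
        "messages": [{"role": "user", "content": question}],
    }


if __name__ == "__main__":
    body = build_cached_claude_request("<long shared context>", "Summarize the open risks.")
    print(json.dumps(body, indent=2))
```

With the official `anthropic` Python SDK, `client.messages.create(**body)` would send it. Only the `messages` part changes between calls, so the cached system block is what gets reused; Anthropic documents a minimum cacheable length, so very short prefixes will not benefit.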

If your product relies on many tool calls, note that both vendors now document the hidden tokens tool use introduces, so you can tune your schemas and preambles rather than guess. Developers who treat latency as a design constraint, not an afterthought, will see the compounding gains. [2][6]
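Tool definitions are sent with every request, so schema wording turns directly into input tokens. The sketch below compares a verbose and a trimmed version of a hypothetical `get_campaign_stats` tool, written in the name/description/input_schema shape Anthropic uses. The estimate relies on the common rule of thumb of roughly four characters per token, not on either vendor’s tokenizer; use the vendor’s own token counting tools for real budgets.

```python
import json


def rough_token_estimate(obj) -> int:
    """Crude estimate: ~4 characters per token of compact JSON (rule of thumb, not a tokenizer)."""
    text = json.dumps(obj, separators=(",", ":"))
    return max(1, len(text) // 4)


# Hypothetical tool, verbose version.
verbose_tool = {
    "name": "get_campaign_stats",
    "description": (
        "This tool retrieves the statistics for a marketing campaign. You should use this tool "
        "whenever the user asks about how a campaign is performing, including clicks, spend, "
        "conversions, and any other metric that might be relevant to campaign performance."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "campaign_id": {"type": "string",
                            "description": "The unique identifier of the campaign to look up."},
            "date_range": {"type": "string",
                           "description": "The date range to retrieve, for example last_7_days."},
        },
        "required": ["campaign_id"],
    },
}

# Same hypothetical tool, trimmed.
trimmed_tool = {
    "name": "get_campaign_stats",
    "description": "Clicks, spend, and conversions for one campaign.",
    "input_schema": {
        "type": "object",
        "properties": {
            "campaign_id": {"type": "string"},
            "date_range": {"type": "string", "description": "e.g. last_7_days"},
        },
        "required": ["campaign_id"],
    },
}

CALLS_PER_DAY = 10_000  # hypothetical volume
for label, tool in (("verbose", verbose_tool), ("trimmed", trimmed_tool)):
    per_call = rough_token_estimate(tool)
    print(f"{label}: ~{per_call} tokens per call, ~{per_call * CALLS_PER_DAY:,} per day")
```

The absolute numbers are rough, but the relative difference is the useful signal: trimming descriptions the model does not need saves tokens on every single call.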

Claude Opus 4.1 vs GPT-5 cost and context, real numbers for 2025 budgets

OpenAI prices GPT-5 at $1.25 per million input tokens and $10 per million output tokens, with GPT-5 mini at $0.25 in and $2 out, and GPT-5 nano at $0.05 in and $0.40 out. The Batch API cuts both sides in half, and caching reduces the cost of repeated inputs. For teams that prototype widely and ship often, this pricing reads as a deliberate nudge toward more usage, not less. [3]

Anthropic prices Claude Opus 4.1 at $15 per million input tokens and $75 per million output tokens, with prompt caching and the Batch API giving meaningful relief on repeated prompts and overnight processing. Opus 4.1 runs with a 200K-token context window, while Anthropic’s 1M-token context is a Sonnet-only preview, which keeps Opus sessions squarely in the large but not extreme context class. [6][5]
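The list prices above can be turned into a quick budgeting sketch. The workload of 200M input and 40M output tokens per month is hypothetical, and the calculation ignores caching discounts, volume tiers, and taxes; it simply applies the per-million prices quoted in this article and the half-price Batch discount both vendors advertise.

```python
# USD per million tokens (input, output), as quoted in this article for 2025.
# Keys are readable labels, not API model IDs.
PRICES = {
    "GPT-5": (1.25, 10.00),
    "GPT-5 mini": (0.25, 2.00),
    "GPT-5 nano": (0.05, 0.40),
    "Claude Opus 4.1": (15.00, 75.00),
}
BATCH_DISCOUNT = 0.5  # Batch APIs are quoted at half price


def monthly_cost(model: str, input_tokens: int, output_tokens: int, batch: bool = False) -> float:
    """List-price cost in USD; ignores caching, tiers, and taxes.
    For GPT-5, output_tokens should include hidden reasoning tokens, which are billed as output."""
    price_in, price_out = PRICES[model]
    cost = (input_tokens * price_in + output_tokens * price_out) / 1_000_000
    return cost * BATCH_DISCOUNT if batch else cost


# Hypothetical monthly workload.
IN_TOK, OUT_TOK = 200_000_000, 40_000_000
for model in PRICES:
    standard = monthly_cost(model, IN_TOK, OUT_TOK)
    batched = monthly_cost(model, IN_TOK, OUT_TOK, batch=True)
    print(f"{model:16} ${standard:>10,.2f}  (batch ${batched:>10,.2f})")
```

On this hypothetical workload GPT-5 comes to $650 a month against $6,000 for Opus 4.1, roughly a 9x gap at list price. Heavy prompt caching on repeated contexts would change both figures, so rerun the numbers with your own token mix.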

A planning wrinkle finance leaders should note: subscription access to coding assistants can be abused by heavy automation. Recent reporting surfaced “inference whales” who generated five-figure backend costs on flat-price plans, and vendors are already tightening rate limits and changing tiers. If your team relies on a subscription tool that wraps these models, read the fine print and expect usage-based billing to win. [10]

Claude Opus 4.1 vs GPT-5 safety, governance, and enterprise fit

GPT-5’s system card and launch notes emphasize fewer hallucinations, clearer limits under uncertainty, and strict safeguards for sensitive domains. This matters for regulated teams that must log why an agent took an action and whether it accessed the correct tool. Stronger instruction following reduces the need for complex prompt wrappers, which lowers operational risk. [1]

Anthropic continues to push a conservative safety posture. A recent update lets Claude exit persistently abusive conversations; this will be rare in normal business use, but it demonstrates a governance stance enterprise buyers understand. It also signals that long-running agents need to handle user intent safely, not just complete tasks. [11][12]

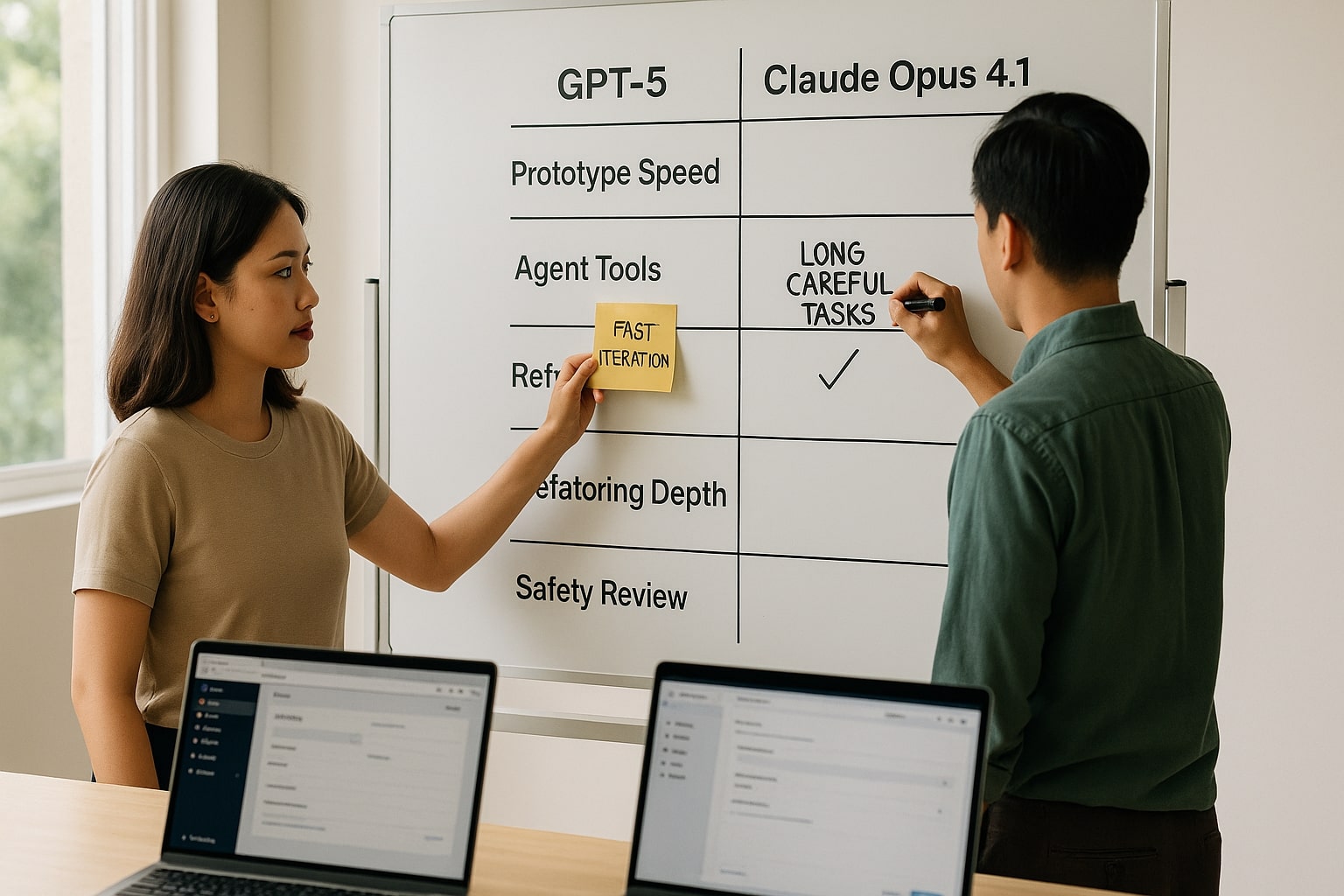

Practical split, GPT-5 for fast tool-rich iterations, Opus 4.1 for long careful work with steady behavior

Who should pick which model, a practical split for builders and marketers

If you are a startup engineer or product designer who ships many prototypes and leans on agentic workflows, GPT-5 is the default: the minimal reasoning mode and token efficiency help you iterate quickly, and the tool-use ergonomics remove most of the friction that slowed previous models. [2][3]

If you are on a backend refactoring team, or a research analyst with long sessions that must trace details across many files and pages, Claude Opus 4.1 remains a precision choice, especially when combined with prompt caching and batch flows that amortize cost and reduce churn. [6][4]

If you are a performance marketer balancing cost and velocity, and you rely on frequent creative iterations, fast routing to tools, and clean summaries for reporting, GPT-5 will likely lift throughput. If your tasks skew toward careful copy audits, compliance checks, and long analytical reviews of product feedback, Opus 4.1 will feel calm and predictable. [2][4]

Claude Opus 4.1 vs GPT-5, feature and pricing comparison table

| Category | GPT-5 | Claude Opus 4.1 | What it means |
|---|---|---|---|
| Reasoning and tools | Strong agentic tool calling; fewer tool calls and tokens at similar quality; controls for reasoning effort and verbosity [2] | Upgraded agentic performance; documented tool-use token overhead for planning [6] | GPT-5 feels proactive on multi-tool tasks; Opus offers predictable planning with explicit token accounting |
| Coding benchmarks | SWE-bench Verified 74.9 percent, Aider polyglot 88 percent [2] | SWE-bench Verified 74.5 percent [4] | Both are top tier; GPT-5 holds a slim lead on reported coding evals |
| Pricing, API | $1.25 in, $10 out per MTok; mini and nano tiers cheaper; Batch API halves cost [3] | $15 in, $75 out per MTok; Batch at half price; prompt caching discounts [6] | GPT-5 is the cost leader for most workloads |
| Context window | 400K tokens total in the API, up to 272K input and 128K output [2] | 200K tokens on Opus 4.1; the 1M preview sits with the Sonnet line, not Opus [6][5] | Opus handles large but not extreme contexts; plan for Sonnet if you truly need 1M |
| Safety posture | System card highlights factuality and reduced deception under reasoning [1] | Option to end abusive chats shows conservative guardrails [11] | Buyers in regulated teams can align policy and product choices |
| Availability | API, ChatGPT, Azure AI Foundry, and other Microsoft surfaces at launch [2] | API, plus Amazon Bedrock and Google Vertex AI endpoints [4] | Both reach major clouds; match the model to your existing provider |

Field notes that translate benchmarks into daily decisions for tech, marketing, and business teams

Field notes for our audience, tech, marketing, business

Start with the job, not the model. If your sprint needs code and content in the same afternoon, with many tool calls across repos, dashboards, and sheets, GPT-5 will compress the cycle and keep cost predictable. If you are reviewing sensitive copy, refactoring a brittle service, or combing through long research with many citations, Opus 4.1 rewards patience with steady output and fewer surprises. In practice, many teams will keep both and route by task, since the price gap is significant and the behavioral differences are useful. [2][4][3][6]
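Routing by task can start as a plain lookup table. This is a minimal sketch of one possible split based on the guidance in this article; the task categories, the `Route` type, and the model labels are hypothetical, and the effort levels are a judgment call to tune against your own logs rather than a vendor recommendation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Task(Enum):
    QUICK_TOOL_RUN = "quick tool-heavy iteration"
    CONTENT_DRAFT = "creative or content draft"
    LONG_REFACTOR = "long refactor of a brittle service"
    COMPLIANCE_REVIEW = "sensitive copy or compliance review"


@dataclass(frozen=True)
class Route:
    model: str                       # hypothetical labels; confirm each vendor's current model IDs
    reasoning_effort: Optional[str]  # only used for GPT-5 in this sketch


ROUTES = {
    Task.QUICK_TOOL_RUN: Route("gpt-5", "minimal"),
    Task.CONTENT_DRAFT: Route("gpt-5", "low"),
    Task.LONG_REFACTOR: Route("claude-opus-4-1", None),
    Task.COMPLIANCE_REVIEW: Route("claude-opus-4-1", None),
}


def route(task: Task) -> Route:
    """Return the model (and GPT-5 effort level, if any) for a task type."""
    return ROUTES[task]


if __name__ == "__main__":
    for task in Task:
        r = route(task)
        print(f"{task.value:40} -> {r.model} (effort={r.reasoning_effort})")
```

Keeping the table in one place makes it easy to log which route served each request and to move a task category from one model to the other when your own cost and quality numbers say so.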

A note on energy and operational transparency

The 2025 conversation around model energy use grew louder. Reporting and commentary questioned the power draw of large reasoning runs and called for clearer disclosures. Buyers who manage sustainability goals should expect more transparency from vendors and should budget for the real-world cost of long agentic sessions. This is not a reason to avoid the tools; it is a reason to plan usage and governance with the same care you give to spend. [1]

The last word before you choose

Pick GPT-5 when you want an assertive collaborator that plans, calls tools, and finishes work quickly at friendly prices. Pick Claude Opus 4.1 when you want meticulous behavior on long, careful tasks with strong safety defaults. Then measure both against your own harness and logs, since your tokens, your tools, and your latency are unique. Have thoughts, different results, or a niche workflow that flips the answer? Drop a comment and tell us what you saw and what surprised you.

References

- [1] OpenAI, Introducing GPT-5
- [2] OpenAI, Introducing GPT-5 for developers
- [3] OpenAI, API pricing for the GPT-5 family
- [4] Anthropic, Claude Opus 4.1 announcement
- [5] Anthropic, Claude Opus 4.1 product page
- [6] Anthropic, Claude pricing, batch and caching
- [7] SWE-bench, official benchmark site and guidance
- [8] Meta Engineering, Andromeda retrieval engine
- [9] Meta Business, AI innovation in ads ranking, performance notes
- [10] Business Insider, inference whales and subscription economics
- [11] The Verge, Claude can end persistently harmful chats
- [12] The Guardian, debate around Anthropic safety choices