Table of Contents

- What the ElevenLabs Voice Cloning Consent Policy Really Requires in 2025

- The Legal Context Around the ElevenLabs Voice Cloning Consent Policy

- Why Consent Is the Ethical Spine of ElevenLabs Voice Cloning

- How the Product Operationalizes the ElevenLabs Voice Cloning Consent Policy

- Pricing and Plan Fit for Consent-Centric Workflows in ElevenLabs

- A Practical Playbook To Apply the ElevenLabs Voice Cloning Consent Policy

- Scenarios That Clarify the ElevenLabs Voice Cloning Consent Policy

- Governance, Audit, and a Consent Kit You Can Use

- Voice Cloning Consent Compliance Kit 2025

- Where the ElevenLabs Voice Cloning Consent Policy Goes Next

Margabagus.com – Global adoption of synthetic voices has surged across media, customer support, and creator workflows, while enforcement has caught up through a patchwork of rules and platform policies. At the center sits the ElevenLabs Voice Cloning Consent Policy, a living set of restrictions and verification steps designed to keep production uses inside clear consent lines and to deter impersonation and election abuse through product-level guardrails. The policy forbids unauthorized or deceptive cloning, requires users to confirm they hold the right and consent to clone a voice, and connects to detection and reporting tools that can trace questionable audio back to the generating account [1][8].

In 2025, regulation matured. The EU AI Act, in force since August 2024, began applying phased obligations for deepfake transparency and governance, while the United States advanced right of publicity and digital replica laws led by Tennessee and California. These milestones shape how teams capture consent, label outputs, and audit generations from ideation to release [6][7][9].

The interface reinforces the requirement to hold rights and consent before cloning.

What the ElevenLabs Voice Cloning Consent Policy Really Requires in 2025

The ElevenLabs Voice Cloning Consent Policy is anchored in a prohibited use rule set that bans unauthorized or harmful impersonation and bans deceptive content that hides its synthetic nature. Users must attest they have the right and consent to clone the target voice before a clone can be saved. The platform states it can trace generated audio to the responsible account, and it investigates reported infringements, which adds accountability to public productions [1][8].

Practically, consent flows appear in product surfaces. During instant cloning, the interface asks for voice details and requires confirmation that you hold the right and consent to clone. ElevenLabs documentation and privacy language further explain that they can process a verification clip from the voice owner to confirm identity and permission when a third party will use that voice [3][2].

The safety model extends beyond a checkbox. ElevenLabs maintains a public AI Speech Classifier that helps determine if an audio sample likely originated from their system, and it operates no-go voices safeguards that detect and block the creation of sensitive political voice clones, particularly around election cycles [4][5].

What this means for teams

- Treat consent as a documented license, not a general acknowledgment, with scope, duration, and revocation clauses.

- Capture a fresh verification sample from the talent for identity matching, then link that proof to the voice asset ID.

- Run classifier checks during QA, add provenance labels where required, and keep an incident playbook ready.
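To make the "documented license" idea concrete, here is a minimal sketch of a consent record with scope, duration, and revocation fields. The class name `ConsentLicense`, its fields, and the example values are illustrative assumptions, not an ElevenLabs schema or a legal template.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ConsentLicense:
    """Hypothetical internal record of one talent's consent."""
    talent_name: str
    voice_id: str                      # internal voice asset ID (assumed naming)
    allowed_uses: frozenset            # scope of the license
    valid_from: date                   # start of the license term
    valid_until: date                  # end of the license term
    revoked_on: Optional[date] = None  # set when the talent withdraws consent

    def permits(self, use: str, on: date) -> bool:
        """True only if the use is in scope, in term, and not revoked."""
        if self.revoked_on is not None and on >= self.revoked_on:
            return False
        in_term = self.valid_from <= on <= self.valid_until
        return in_term and use in self.allowed_uses


grant = ConsentLicense(
    talent_name="Example Talent",
    voice_id="voice-001",
    allowed_uses=frozenset({"marketing", "localization"}),
    valid_from=date(2025, 1, 1),
    valid_until=date(2025, 12, 31),
)
print(grant.permits("marketing", date(2025, 6, 1)))     # True
print(grant.permits("political_ad", date(2025, 6, 1)))  # False
```

Keeping the check in one method means a script review, a QA step, and a release gate can all ask the same question of the same record.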

The Legal Context Around the ElevenLabs Voice Cloning Consent Policy

The EU AI Act sets a multi-date timeline. Prohibitions and AI literacy duties applied from February 2025, governance rules and obligations for general-purpose AI models applied from August 2025, and full applicability lands in 2026, with extended time for certain embedded high-risk systems. Synthetic media transparency, including deepfake labeling, becomes a default expectation in content supply chains that publish to EU audiences [6].

In the United States, state laws built guardrails for voice cloning. Tennessee’s ELVIS Act expanded protection of name, image, likeness, and voice with civil and criminal remedies, and took effect in July 2024. This law targets unauthorized AI impersonation of artists and sets a reference point for other states considering similar voice protections [7].

California advanced digital replica bills that strengthen informed consent for performers and address post-mortem rights. AB 2602 applies from January 2025, while AB 1836 covers deceased performers and takes effect in 2026, which means long-running catalogs and estates need clear approvals before any new synthetic works reach the market [9].

Regulators also moved through policy levers and public challenges. The United States FTC elevated voice cloning harms to a national priority area through its Voice Cloning Challenge and guidance, which signals growing scrutiny of unconsented impersonation in fraud and robocalls [10].

Ethical production preserves audience trust and protects talent.

Why Consent Is the Ethical Spine of ElevenLabs Voice Cloning

Consent is not just a legal requirement; it is the trust contract between a creator and an audience. Production teams work with living performers, estates, or customers who rely on assistive speech. Respecting consent preserves dignity for the talent, and it preserves credibility for the brand that publishes synthetic audio.

Real incidents demonstrate stakes for people whose voices are cloned without permission. Newsrooms documented political robocalls and unauthorized uses that created confusion and reputational harm, which shows why consent checks and labeling are not optional in modern audio pipelines [11].

Ethics translate into product choices. Use verified voices for character work, localization, and accessibility, and mark outputs as AI generated where laws or platforms expect that transparency. Avoid satire that could be mistaken as real speech by affected communities, and never create a deepfake of an individual for shock value.


How the Product Operationalizes the ElevenLabs Voice Cloning Consent Policy

ElevenLabs publishes a Prohibited Use Policy, a Safety hub with reporting, and the public AI Speech Classifier. The company introduced no-go voices to protect elections by blocking creation of voices that mimic active candidates in some jurisdictions, and it has publicly described that work during election years. These are not perfect shields, yet they raise the cost of misuse and provide a reporting pipeline that links audio to a responsible account [1][4][5].

The platform’s privacy statement adds an important verification lever. When someone other than the owner plans to use the voice, ElevenLabs may process an audio recording from the owner to verify that the voice is theirs and that they consent, which aligns product behavior with the stated policy [2].

Teams should combine these platform features with internal controls. Maintain a central register of voice assets, consent files, and scope metadata. Map each campaign to a voice ID and keep a quick takedown route open in case an asset is misused by third parties on social platforms.
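The internal register described above can start very small. The sketch below is one possible in-memory shape for it. `VoiceRegister`, its method names, and the sample IDs are assumptions for illustration; a real team would likely back this with a database or a shared spreadsheet.

```python
from collections import defaultdict


class VoiceRegister:
    """Hypothetical central register: voice assets, consent files, campaigns."""

    def __init__(self):
        self._voices = {}                    # voice_id -> consent file and scope
        self._campaigns = defaultdict(list)  # voice_id -> campaigns using it

    def add_voice(self, voice_id, consent_file, scope):
        self._voices[voice_id] = {"consent_file": consent_file, "scope": set(scope)}

    def map_campaign(self, campaign, voice_id, channels):
        # Refuse to map a campaign to a voice that has no consent on file.
        if voice_id not in self._voices:
            raise KeyError(f"no consent record for {voice_id}")
        self._campaigns[voice_id].append(
            {"campaign": campaign, "channels": list(channels)}
        )

    def takedown_targets(self, voice_id):
        """Every channel where this voice was published, for a fast takedown."""
        entries = self._campaigns.get(voice_id, [])
        return sorted({ch for entry in entries for ch in entry["channels"]})


register = VoiceRegister()
register.add_voice("voice-001", "consents/voice-001.pdf", ["marketing"])
register.map_campaign("spring-launch", "voice-001", ["youtube", "podcast"])
register.map_campaign("product-tour", "voice-001", ["youtube", "website"])
print(register.takedown_targets("voice-001"))  # ['podcast', 'website', 'youtube']
```

The lookup by voice ID is the part that matters during an incident: one call answers "where did this voice go out?"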

Choose capacity and features that match your consent governance.

Pricing and Plan Fit for Consent-Centric Workflows in ElevenLabs

Price affects how fast your team can scale generation under a consent framework. ElevenLabs offers free and paid plans for studio use and API use. Starter and Creator appeal to individual creators who need instant cloning and a small number of commercial uses each month. Pro and Scale unlock higher capacity, higher quality audio output, and additional API features that matter for large content calendars and dubbing pipelines [12].

Below is a concise comparison focused on capacity and the cloning context. You should confirm current allowances on the official pricing page, since plan details can change over time and enterprise contracts often include custom terms [12][13].

| Plan | Monthly price | Included credits (indicative) | Voice cloning access | Audio output quality | API access |
|---|---|---|---|---|---|
| Free | $0 | 10k credits | No professional clone | Standard | Limited |
| Starter | $5 | ~30k credits | Instant clone | Standard | Studio and basic API |
| Creator | $11 | ~100k credits | Professional clone available, with consent | Higher quality | Usage based overages |
| Pro | $99 | ~500k credits | Professional clone available, with consent | Highest quality options | Advanced API, higher concurrency |
| Scale | $330 and up | 2M credits and seats | Professional clone, team seats | High quality, team features | Team features and priority support |

Pricing note

Credits translate to minutes of audio depending on the model and bitrate. API pages provide concrete minute equivalents, which helps forecast budgets for dubbing and agents [13].
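For budgeting, the arithmetic is a simple division once you know the rate for your model. The sketch below takes the credits-per-minute rate as an input. The value `1_000` used in the example is a placeholder for illustration only, not an ElevenLabs figure, so replace it with the number from the official pricing pages.

```python
def estimated_minutes(credits, credits_per_minute):
    """Minutes of audio a credit balance covers at a given rate."""
    if credits_per_minute <= 0:
        raise ValueError("credits_per_minute must be positive")
    return credits / credits_per_minute


def credits_shortfall(plan_credits, minutes_needed, credits_per_minute):
    """Extra credits needed beyond the plan allowance (0 if the plan suffices)."""
    needed = minutes_needed * credits_per_minute
    return max(0, needed - plan_credits)


# Placeholder rate: look up the real figure for your model before forecasting.
ASSUMED_RATE = 1_000

print(estimated_minutes(100_000, ASSUMED_RATE))       # 100.0
print(credits_shortfall(100_000, 120, ASSUMED_RATE))  # 20000
```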

A simple sequence keeps teams aligned from brief to release.

A Practical Playbook To Apply the ElevenLabs Voice Cloning Consent Policy

This section gives you a complete, hands-on sequence that legal, product, and content teams can run today. It is designed for tech and business readers who want a repeatable workflow.

1) Collect explicit, informed consent

Use a plain language consent template that covers scope, media, duration, revocation, sublicensing, territories, and compensation. Add a clause for provenance labeling in the EU and for future regulatory changes. Keep a consent file URL that can be referenced in audits. The FTC has signaled sustained interest in voice cloning harms, which means consent records are part of your risk posture [10].

2) Verify identity and link to a voice ID

Capture a fresh verification clip recorded by the owner, then store a checksum and a match report. ElevenLabs supports a verification step when a third party will use someone’s voice, which aligns with this control [2].
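Storing a checksum for the verification clip can be done with the standard library. This is a minimal sketch that hashes the clip with SHA-256 and ties the digest to a voice ID. The function names, the record layout, and the stand-in bytes are assumptions, and the match report mentioned above is not covered here.

```python
import hashlib
import io


def checksum_stream(stream, chunk_size=65536):
    """SHA-256 hex digest of a binary stream, read in chunks."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def verification_record(voice_id, clip_name, stream):
    """Link the clip's checksum to the voice asset it verifies."""
    return {
        "voice_id": voice_id,
        "clip_name": clip_name,
        "sha256": checksum_stream(stream),
    }


# Stand-in bytes so the example runs anywhere; in practice you would pass
# a real file opened in binary mode, e.g. open("verification.wav", "rb").
demo_clip = io.BytesIO(b"stand-in bytes for a verification clip")
record = verification_record("voice-001", "verification.wav", demo_clip)
print(record["sha256"])
```

If the stored digest ever stops matching the archived clip, you know the evidence file was altered or swapped.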

3) Configure product safeguards

Use the Personal tab for owned voices, confirm that you have the right and consent to clone, and save the voice. For sensitive scenarios, test samples through the AI Speech Classifier and keep reports with your release notes [3][4].

4) Label synthetic media where required

The EU AI Act phases in obligations related to transparency and governance. Apply clear labels in captions, show notes, and track assets with a metadata field for provenance, then update when obligations expand in 2026 [6].
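One way to keep labels and the provenance field in sync is to apply both in one place. The sketch below is an assumption-heavy illustration: the disclosure wording, the `provenance` field name, and the asset layout are all hypothetical, and none of it is text prescribed by the EU AI Act or by ElevenLabs.

```python
# Assumed wording; have counsel approve the actual disclosure text.
AI_DISCLOSURE = "This audio was generated with AI voice synthesis."


def labeled_caption(caption, disclosure=AI_DISCLOSURE):
    """Append the disclosure to a caption unless it is already present."""
    if disclosure in caption:
        return caption
    return f"{caption}\n\n{disclosure}"


def with_provenance(asset, voice_id, disclosure=AI_DISCLOSURE):
    """Return a copy of the asset with a provenance metadata field added."""
    tagged = dict(asset)
    tagged["provenance"] = {
        "synthetic": True,
        "voice_id": voice_id,
        "disclosure": disclosure,
    }
    return tagged


episode = {"title": "Founder keynote (Spanish)", "caption": "Highlights from the keynote."}
tagged = with_provenance(episode, "voice-001")
tagged["caption"] = labeled_caption(tagged["caption"])
print(tagged["caption"])
```

Because `labeled_caption` checks for an existing disclosure first, rerunning it on republished assets does not stack duplicate labels.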

5) Enforce a red flag list

Adopt the spirit of no-go voices for internal rules around politicians, minors, deceased individuals without estate approval, and protected professions. ElevenLabs operates its own guardrail for political candidates; you should match or exceed it to reduce abuse risk during election periods [5].
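An internal red flag list can be enforced as a simple gate before any clone request is approved. The category names below mirror the groups listed in this step, but the tag vocabulary and function names are assumptions for illustration. This is not how ElevenLabs implements its no-go voices.

```python
# Hypothetical tag vocabulary based on the categories named in this step.
RED_FLAG_CATEGORIES = {
    "politician",
    "minor",
    "deceased_without_estate_approval",
    "protected_profession",
}


def red_flags(subject_tags):
    """Return the red flag categories that apply to a clone request."""
    return sorted(RED_FLAG_CATEGORIES & set(subject_tags))


def may_proceed(subject_tags):
    """A request may proceed only if no red flag category applies."""
    return not red_flags(subject_tags)


print(red_flags(["politician", "public_figure"]))  # ['politician']
print(may_proceed(["employee", "adult"]))          # True
```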

6) Run QA and maintain an audit trail

Before publication, run random samples through the classifier, validate that scripts and use cases match consent scope, and log release approvals. Archive voice assets and consent files with restricted access.
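The sampling and logging parts of this step are easy to script. The sketch below picks random renders for a classifier check and writes one JSON line per release decision. The classifier check itself is left as a separate step, and every name, field, and sample value here is a hypothetical choice rather than a platform feature.

```python
import json
import random
from datetime import datetime, timezone


def pick_qa_samples(audio_files, k, seed=None):
    """Return up to k randomly chosen files for a classifier check."""
    files = list(audio_files)
    rng = random.Random(seed)  # pass a seed to make the selection repeatable
    return rng.sample(files, min(k, len(files)))


def audit_entry(voice_id, release_id, sampled_files, in_scope, approved_by):
    """Serialize one release decision as a JSON line for an append-only log."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "voice_id": voice_id,
        "release_id": release_id,
        "sampled_files": sampled_files,
        "in_consent_scope": in_scope,
        "approved_by": approved_by,
    }, sort_keys=True)


renders = ["ep01.mp3", "ep02.mp3", "ep03.mp3", "ep04.mp3"]
samples = pick_qa_samples(renders, k=2, seed=42)
line = audit_entry("voice-001", "release-2025-03", samples, True, "legal@example.com")
print(line)
```

Recording the seed alongside the entry would let an auditor reproduce exactly which files were sampled.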

7) Prepare for incidents

Create a takedown protocol with a response contact, a link to report content to the platform, and a comms template. Newsrooms and researchers have shown how fast synthetic audio can spread during election windows, which calls for faster response than normal brand incidents [11].

Scenarios That Clarify the ElevenLabs Voice Cloning Consent Policy

This short set clarifies gray areas you might hit in production.

- Localizing a founder keynote

You hold the founder’s consent for company marketing, which covers translation to Spanish and Japanese. You label the podcast description with an AI disclosure for EU listeners and archive the classifier report. This matches policy and emerging regulations [6].

- Narration with the voice of a public figure

You want a celebrity voice. Without written permission from the person or their rights holder, you must not proceed. The prohibited use policy bars unauthorized impersonation, and state laws introduce additional liability for voice replicas [1][7].

- Estate-approved archival voice

You plan a documentary that recreates a deceased performer’s voice. California’s AB 1836 covers digital replicas of deceased performers and requires consent from the estate. Obtain written approval and log scope before production [9].

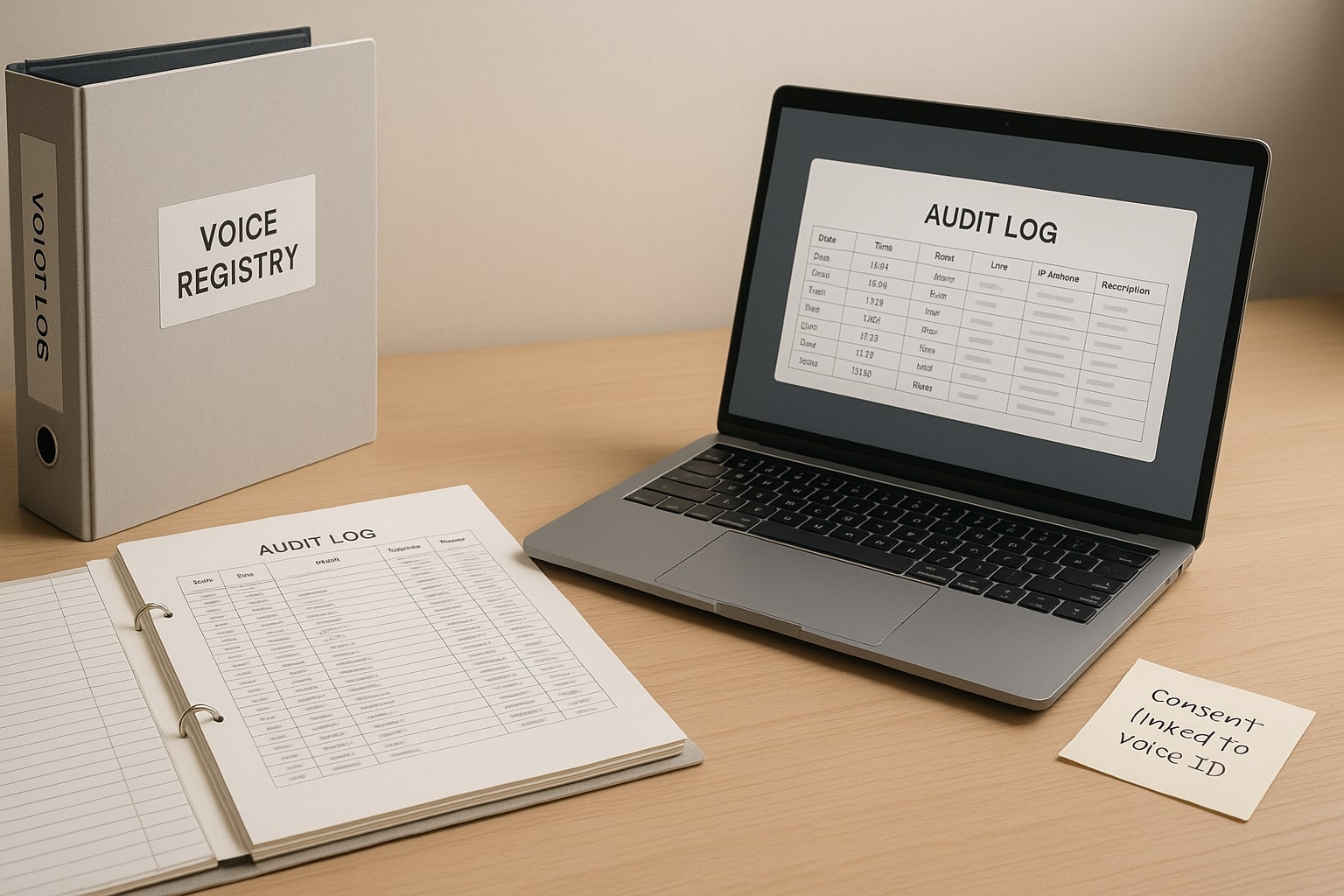

Central records make labeling and incident response fast.

Governance, Audit, and a Consent Kit You Can Use

Strong governance connects legal intent with real-time product settings and publishing habits. Your team should maintain a single source of truth that maps voice IDs to consent documents, release approvals, and downstream channels. As a starting point, use the downloadable workbook below, then adapt it to your stack.

Voice Cloning Consent Compliance Kit 2025

A practical toolkit containing a consent checklist, a risk matrix, an audit log template, and a cross-jurisdiction regulatory tracker, suited to legal, PM, and content ops teams.

Where the ElevenLabs Voice Cloning Consent Policy Goes Next

Funding and product velocity suggest synthetic speech will move deeper into everyday applications, which puts more pressure on consent workflows and provenance labeling across regions. ElevenLabs expanded its product and funding in early 2025, and industry peers delayed or restricted general release tools when misuse risks spiked. You should expect more classifiers, tighter account traceability, and heavier emphasis on labels in the coming year [14][15].

As teams look ahead to the next release cycle, the smartest path blends product guardrails, explicit consent, auditable records, and clear public disclosures. That combination allows you to ship at the pace of creators, while respecting the people whose voices make your stories possible. If you have questions or experiences to share, leave a comment so we can learn together and refine this playbook.

References

- [1] ElevenLabs, Prohibited Use Policy

- [2] ElevenLabs, Privacy Policy

- [3] ElevenLabs Docs, Instant Voice Cloning

- [4] ElevenLabs, AI Speech Classifier

- [5] ElevenLabs Help, No-Go Voices

- [6] European Commission, EU AI Act timeline

- [7] Latham & Watkins, Tennessee ELVIS Act analysis

- [8] ElevenLabs Help, Restrictions and tracing

- [9] Davis+Gilbert, California AB 1836 and AB 2602

- [10] FTC, Voice Cloning Challenge

- [11] WIRED, Analysis of Biden robocall and cloning

- [12] ElevenLabs, Pricing overview

- [13] ElevenLabs, API pricing and minutes mapping

- [14] Reuters, ElevenLabs funding and roadmap

- [15] The Guardian, OpenAI voice engine consent stance