Table of Contents

- The Current State: Why Most Developers Struggle with AI Code Generation
  - The Promise vs Reality Gap
  - The Hidden Costs of Poor Prompting
- Core Problems: The Four Prompt Engineering Pitfalls
  - Problem 1: The Context Vacuum
  - Problem 2: Ambiguous Success Criteria
  - Problem 3: Missing Examples and Patterns
  - Problem 4: Ignoring Model-Specific Behaviors
- The Solution: A Systematic Approach to Clean Code with AI Prompt Techniques
  - Phase 1: Context Architecture
  - Phase 2: Pattern-Driven Prompting
  - Phase 3: Quality Gates Through Prompting
- Advanced Techniques: AI Code Generation Best Practices
  - Multi-Stage Prompting for Complex Features
  - The Debugging Partner Approach
  - Performance-Focused Prompting
- Real-World Implementation: GitHub Copilot Success Patterns
  - The Microsoft Learning: Context-Aware Development
  - The Variable Naming Revolution
- Measuring Success: Quality Metrics That Matter
  - Code Quality Indicators
  - The Trust Building Process
- Future-Proofing Your Prompt Engineering Skills
  - The 2025 Landscape
  - Staying Ahead of the Curve
- Your Next Steps: From Theory to Practice
- Footnotes

Margabagus.com – AI-generated code is now making its way into production, not just pull requests, and developers increasingly use natural language prompts to guide AI in creating complex software solutions. The question isn’t whether you should use AI for coding; it’s how well you can communicate with it. Recent data from Qodo’s 2025 State of AI Code Quality report shows speed increasing dramatically with AI assistance, and a prompt engineering playbook for AI code is how you keep quality standards from slipping as that happens.

I’ve witnessed firsthand how developers struggle with inconsistent AI outputs, only to discover that their frustration stemmed from poor prompting techniques rather than AI limitations. The difference between clean, production-ready code and debugging nightmares often lies in the precision of your initial request. This guide shows how to guide AI to write reliable code, using methodologies that leading tech companies are already implementing.

The Current State: Why Most Developers Struggle with AI Code Generation


The Promise vs Reality Gap

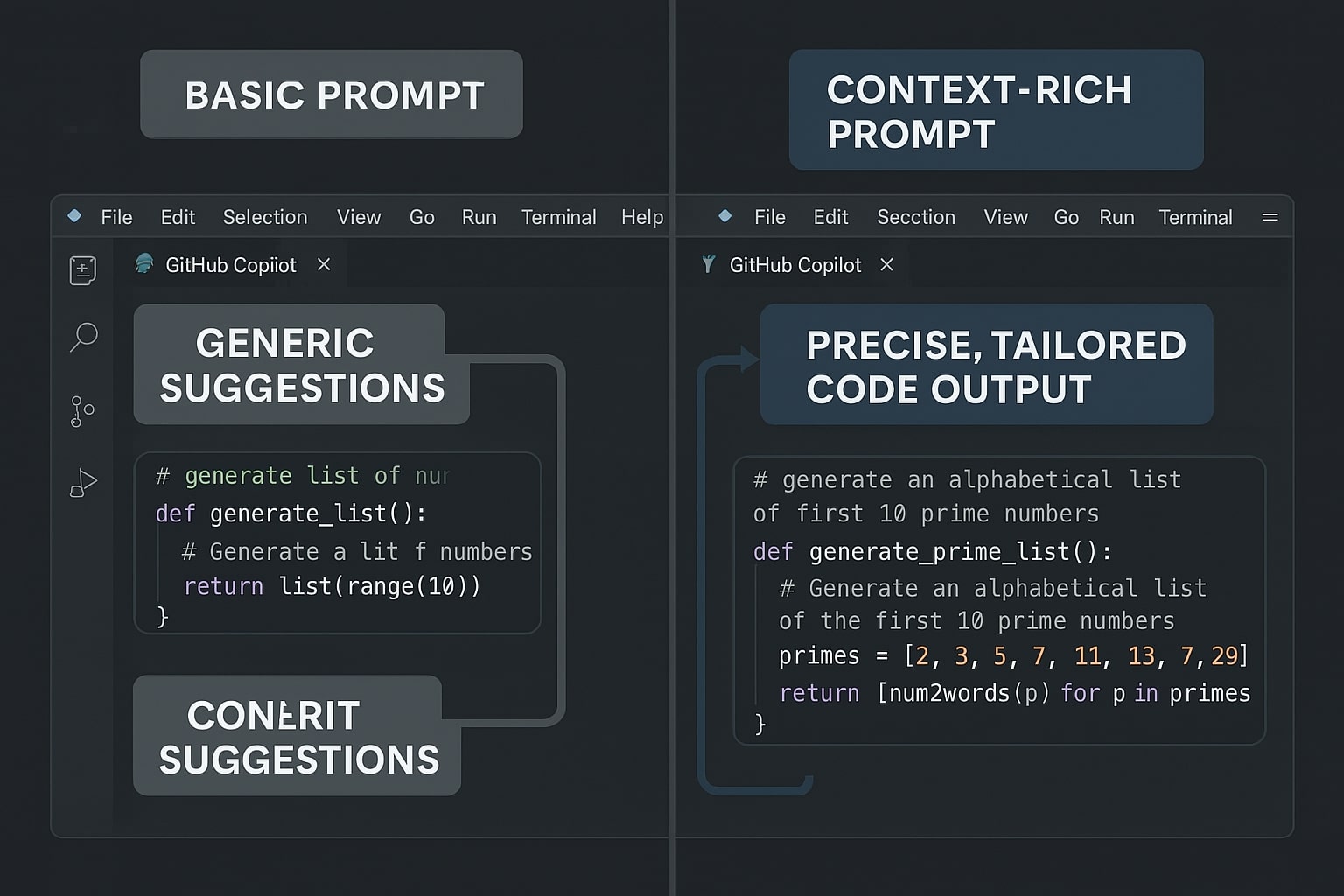

Few-shot prompting has been reported to lift accuracy from 0% to 90% on some tasks, yet most developers report inconsistent results from AI coding assistants. The problem isn’t the AI models themselves; it’s how we communicate our intentions.

Context gaps aren’t limited to edge cases—they emerge in the most common and critical development tasks. When I analyze failed AI coding sessions, I consistently find that developers approach AI tools like search engines rather than sophisticated pair programmers who need detailed context.

The Hidden Costs of Poor Prompting

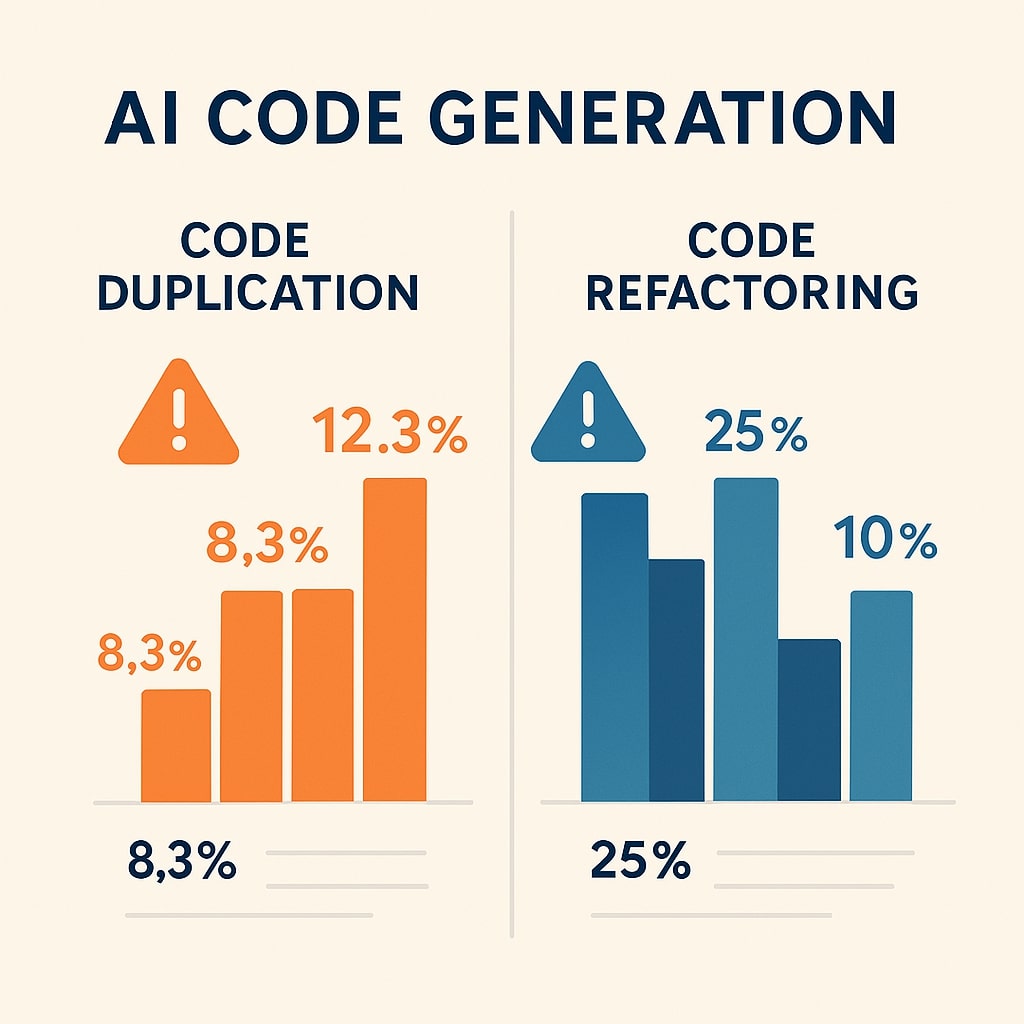

Recent research from GitClear, analyzing 211 million changed lines of code authored between January 2020 and December 2024, reveals alarming trends:

- Lines classified as “copy/pasted” (cloned) rose from 8.3% to 12.3%

- Code churn, defined as the percentage of code that gets discarded less than two weeks after being written, is increasing dramatically

- The number of code blocks with 5 or more duplicated lines increased eightfold during 2024

These statistics paint a clear picture: while AI accelerates code production, poor prompting leads to technical debt that teams spend weeks cleaning up.

Core Problems: The Four Prompt Engineering Pitfalls

Image created with Microsoft Copilot.

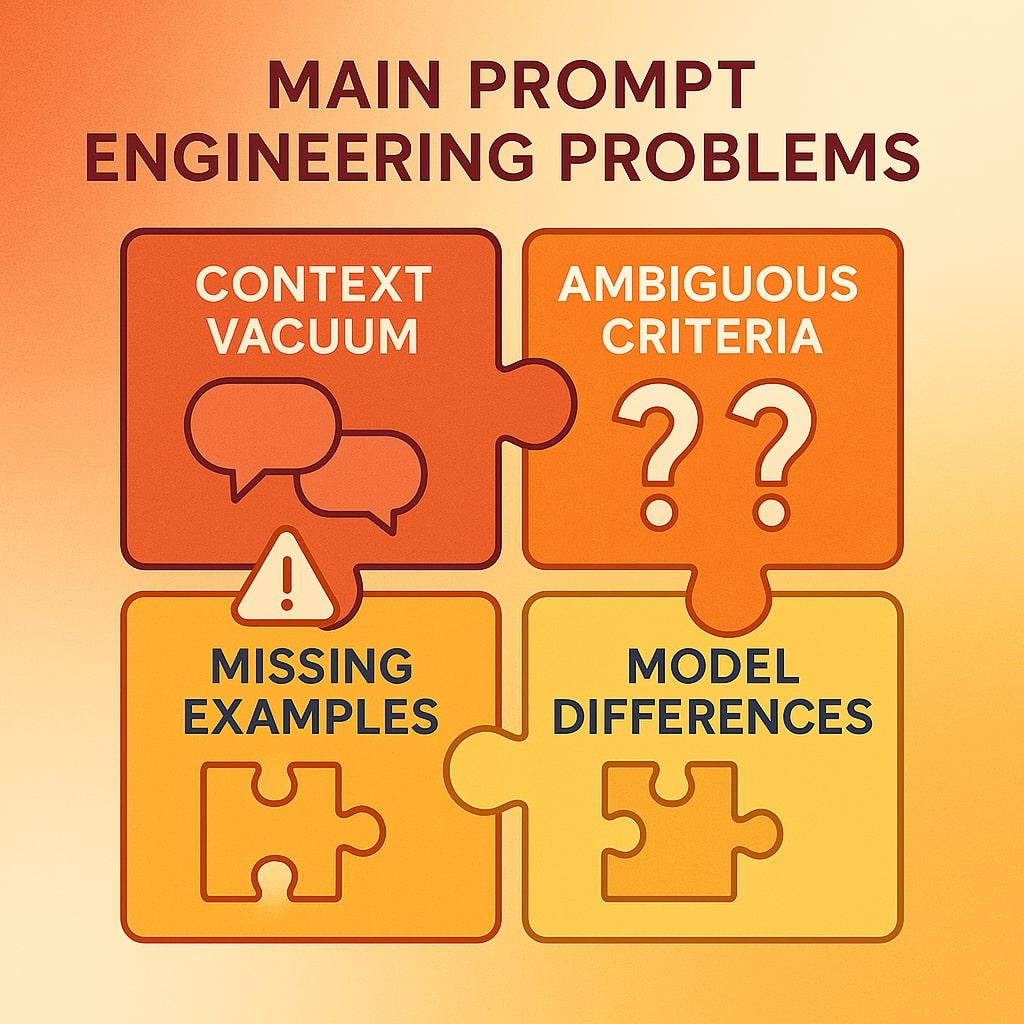

Problem 1: The Context Vacuum

Most developers treat AI like a magic black box. They provide minimal context and expect perfect results. GitHub Copilot better understands your goal when you break things down, but developers often skip this crucial step.

Consider this typical failed interaction:

// Poor prompt
function processData() {
  // AI, write the function
}

The AI has zero context about data structure, processing requirements, or expected outcomes.

Problem 2: Ambiguous Success Criteria

Sometimes you might ask for an optimization or improvement, but you don’t define what success looks like. Requests like “make this faster” or “improve this code” leave AI models guessing about your specific requirements.

Problem 3: Missing Examples and Patterns

Providing a concrete example in the prompt helps the AI understand your intent and reduces ambiguity. Yet developers consistently skip this step, leading to generic solutions that don’t fit their specific use cases.
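As a minimal sketch of what supplying examples can look like, the Python helper below assembles a few-shot prompt from input/output pairs. The function name `build_few_shot_prompt` and the slug-conversion task are illustrative assumptions, not part of any tool's API.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], new_input: str) -> str:
    """Assemble a prompt that shows worked examples before the real request."""
    lines = [f"Task: {task}", ""]
    for number, (example_input, example_output) in enumerate(examples, start=1):
        lines.append(f"Example {number}")
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # The final block leaves "Output:" open so the model completes it
    # in the same shape as the examples above.
    lines.append("Now solve")
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    task="Convert a product title into a URL slug.",
    examples=[
        ("Blue Suede Shoes (Size 9)", "blue-suede-shoes-size-9"),
        ("  Oak Table, Round ", "oak-table-round"),
    ],
    new_input="USB-C Cable 2m",
)
print(prompt)
```

The examples deliberately include awkward inputs (parentheses, stray whitespace, punctuation) because those are the cases a bare instruction tends to leave ambiguous.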

Problem 4: Ignoring Model-Specific Behaviors

Different models (GPT-4o, Claude 4, Gemini 2.5) respond better to different formatting patterns—there’s no universal best practice. What works brilliantly with GitHub Copilot might produce mediocre results with ChatGPT.

The Solution: A Systematic Approach to Clean Code with AI Prompt Techniques

Image created with Microsoft Copilot.

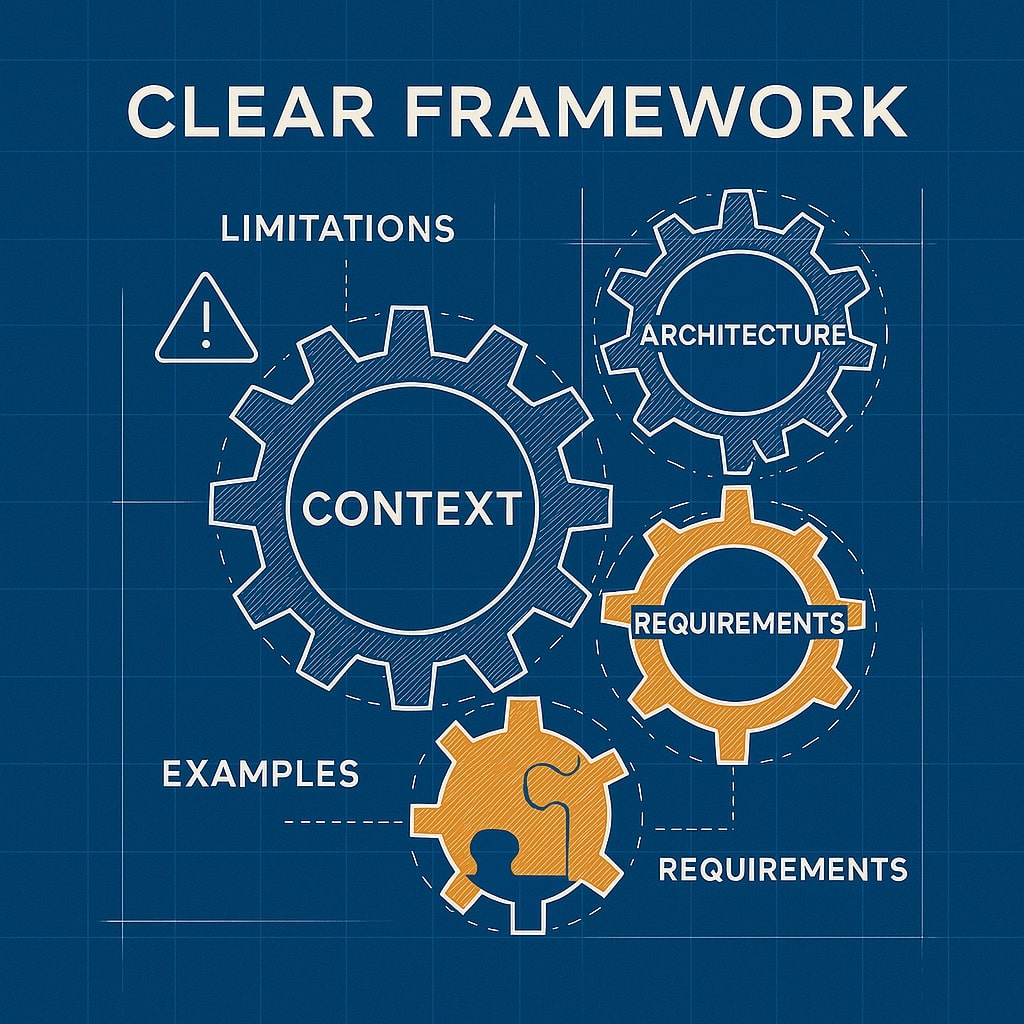

Phase 1: Context Architecture

Your first step involves building comprehensive context for the AI. This isn’t about writing longer prompts—it’s about providing strategic information.

The CLEAR Framework

- Context: What’s the broader system?

- Limitations: What constraints exist?

- Examples: Show, don’t just tell

- Architecture: How does this fit?

- Requirements: What defines success?

/**
 * CONTEXT: User authentication system for e-commerce platform
 * LIMITATIONS: Must handle 10k concurrent users, comply with GDPR
 * ARCHITECTURE: Uses JWT tokens, Redis for session management
 * REQUIREMENTS: Return user object with sanitized data, handle errors gracefully
 *
 * EXAMPLE INPUT: { email: "[email protected]", password: "hashedPassword123" }
 * EXPECTED OUTPUT: { id: 123, email: "[email protected]", role: "customer", token: "jwt..." }
 */
// AI: Create a secure login function following the above specifications
async function authenticateUser(credentials: LoginCredentials): Promise<AuthResult> {
  // Your implementation here
}

This approach gives the AI enough context to generate production-quality code instead of generic examples.
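One way to keep CLEAR prompts consistent across a team is to assemble them from named fields rather than free text. The sketch below is a hypothetical Python helper: the `ClearPrompt` class and its methods are illustrative names, not part of any library. It refuses to render a prompt with an empty section, which catches the "context vacuum" before the request is sent.

```python
from dataclasses import dataclass
from typing import Dict, List, Union


@dataclass
class ClearPrompt:
    """Hypothetical container for the five CLEAR sections plus the request."""
    context: str
    limitations: List[str]
    examples: List[str]
    architecture: str
    requirements: List[str]
    request: str

    def missing_sections(self) -> List[str]:
        """Names of CLEAR sections that were left empty."""
        sections: Dict[str, Union[str, List[str]]] = {
            "context": self.context,
            "limitations": self.limitations,
            "examples": self.examples,
            "architecture": self.architecture,
            "requirements": self.requirements,
        }
        return [name for name, value in sections.items() if not value]

    def render(self) -> str:
        """Build the prompt text, refusing to render an incomplete prompt."""
        missing = self.missing_sections()
        if missing:
            raise ValueError("Empty CLEAR sections: " + ", ".join(missing))
        lines = [
            f"CONTEXT: {self.context}",
            "LIMITATIONS: " + "; ".join(self.limitations),
            "EXAMPLES:",
        ]
        lines += [f"  {example}" for example in self.examples]
        lines += [
            f"ARCHITECTURE: {self.architecture}",
            "REQUIREMENTS: " + "; ".join(self.requirements),
            "",
            self.request,
        ]
        return "\n".join(lines)


login_prompt = ClearPrompt(
    context="User authentication system for e-commerce platform",
    limitations=["Must handle 10k concurrent users", "Comply with GDPR"],
    examples=['Expected output: { id: 123, role: "customer", token: "jwt..." }'],
    architecture="Uses JWT tokens, Redis for session management",
    requirements=["Return user object with sanitized data", "Handle errors gracefully"],
    request="Create a secure login function following the above specifications.",
)
print(login_prompt.render())
```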


Phase 2: Pattern-Driven Prompting

Contrary to what most people think, role prompting (e.g. “You are a math professor...”) is largely ineffective at improving correctness, but architectural patterns work exceptionally well.

The Expert Architecture Pattern

Instead of “Act as a senior developer,” use specific architectural guidance:

/**
 * PATTERN: Repository Pattern with Dependency Injection
 * FRAMEWORK: Node.js with TypeScript, following SOLID principles
 * ERROR HANDLING: Use Result pattern, no throw statements
 * TESTING: Include unit test structure
 */
// Create a UserRepository class that implements the following interface:
interface IUserRepository {
  findById(id: string): Promise<Result<User, Error>>;
  save(user: User): Promise<Result<User, Error>>;
  delete(id: string): Promise<Result<void, Error>>;
}
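To make the "Result pattern, no throw statements" constraint concrete, here is a rough Python sketch of the shape such a repository might take. It is an illustration only: the `Ok`, `Err`, and `InMemoryUserRepository` names are assumptions, a dict stands in for the real data store, and the methods are synchronous for brevity, unlike the TypeScript interface above.

```python
from dataclasses import dataclass
from typing import Dict, Generic, TypeVar, Union

T = TypeVar("T")
E = TypeVar("E")


@dataclass(frozen=True)
class Ok(Generic[T]):
    """Successful outcome carrying a value."""
    value: T


@dataclass(frozen=True)
class Err(Generic[E]):
    """Failed outcome carrying an error description."""
    error: E


Result = Union[Ok[T], Err[E]]


@dataclass(frozen=True)
class User:
    id: str
    email: str


class InMemoryUserRepository:
    """Hypothetical repository; failures are returned, never raised."""

    def __init__(self) -> None:
        self._users: Dict[str, User] = {}

    def save(self, user: User) -> Result[User, str]:
        if not user.id:
            return Err("user id must not be empty")
        self._users[user.id] = user
        return Ok(user)

    def find_by_id(self, user_id: str) -> Result[User, str]:
        user = self._users.get(user_id)
        if user is None:
            return Err(f"user {user_id} not found")
        return Ok(user)


repo = InMemoryUserRepository()
repo.save(User(id="u1", email="a@example.com"))
found = repo.find_by_id("u1")
missing = repo.find_by_id("u2")
print(found)
print(missing)
```

Callers branch on `isinstance(result, Ok)` instead of wrapping calls in try/except, which is the behavior the ERROR HANDLING line in the prompt is asking the AI to reproduce.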

Phase 3: Quality Gates Through Prompting

Transform AI from a code generator into a quality assurance partner by embedding quality checks directly into your prompts.

The TDD-First Approach

Unit tests can also serve as examples. Before writing your function, you can use Copilot to write unit tests for the function.

"""

Test-Driven Development Prompt:
First, create comprehensive unit tests for a password validation function.

Requirements:
- Minimum 8 characters
- Must contain: uppercase, lowercase, number, special character
- Cannot contain common passwords from OWASP list
- Must handle edge cases: empty strings, null values, extremely long inputs

Then implement the function to pass all tests.
"""

def test_password_validation():
    # AI: Generate comprehensive test cases first
    pass

def validate_password(password: str) -> ValidationResult:
    # AI: Now implement to pass all tests above
    pass
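For reference, the result of such a tests-first session might look roughly like the sketch below. Several details are assumptions made for illustration: the `ValidationResult` fields, the 128-character upper bound for "extremely long inputs", and the four-entry `COMMON_PASSWORDS` set, which merely stands in for a real common-password list.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Assumption: a tiny stand-in for a real common-password list.
COMMON_PASSWORDS = {"password", "123456", "qwerty", "letmein"}
MAX_LENGTH = 128  # assumed upper bound for "extremely long inputs"


@dataclass
class ValidationResult:
    is_valid: bool
    errors: List[str] = field(default_factory=list)


def test_password_validation() -> None:
    """Written first: each assertion encodes one requirement from the prompt."""
    assert validate_password("Str0ng!Pass").is_valid
    assert not validate_password("").is_valid                # empty string
    assert not validate_password(None).is_valid              # null value
    assert not validate_password("Sh0rt!").is_valid          # under 8 characters
    assert not validate_password("alllowercase1!").is_valid  # no uppercase
    assert not validate_password("password").is_valid        # common password
    assert not validate_password("Aa1!" * 100).is_valid      # extremely long


def validate_password(password: Optional[str]) -> ValidationResult:
    """Written second: collects every rule violation instead of stopping at the first."""
    if not password:
        return ValidationResult(False, ["password is required"])
    errors: List[str] = []
    if len(password) < 8:
        errors.append("must be at least 8 characters")
    if len(password) > MAX_LENGTH:
        errors.append(f"must be at most {MAX_LENGTH} characters")
    if not re.search(r"[A-Z]", password):
        errors.append("must contain an uppercase letter")
    if not re.search(r"[a-z]", password):
        errors.append("must contain a lowercase letter")
    if not re.search(r"[0-9]", password):
        errors.append("must contain a number")
    if not re.search(r"[^A-Za-z0-9]", password):
        errors.append("must contain a special character")
    if password.lower() in COMMON_PASSWORDS:
        errors.append("must not be a common password")
    return ValidationResult(not errors, errors)


test_password_validation()
```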

Advanced Techniques: AI Code Generation Best Practices

Image created with Microsoft Copilot.

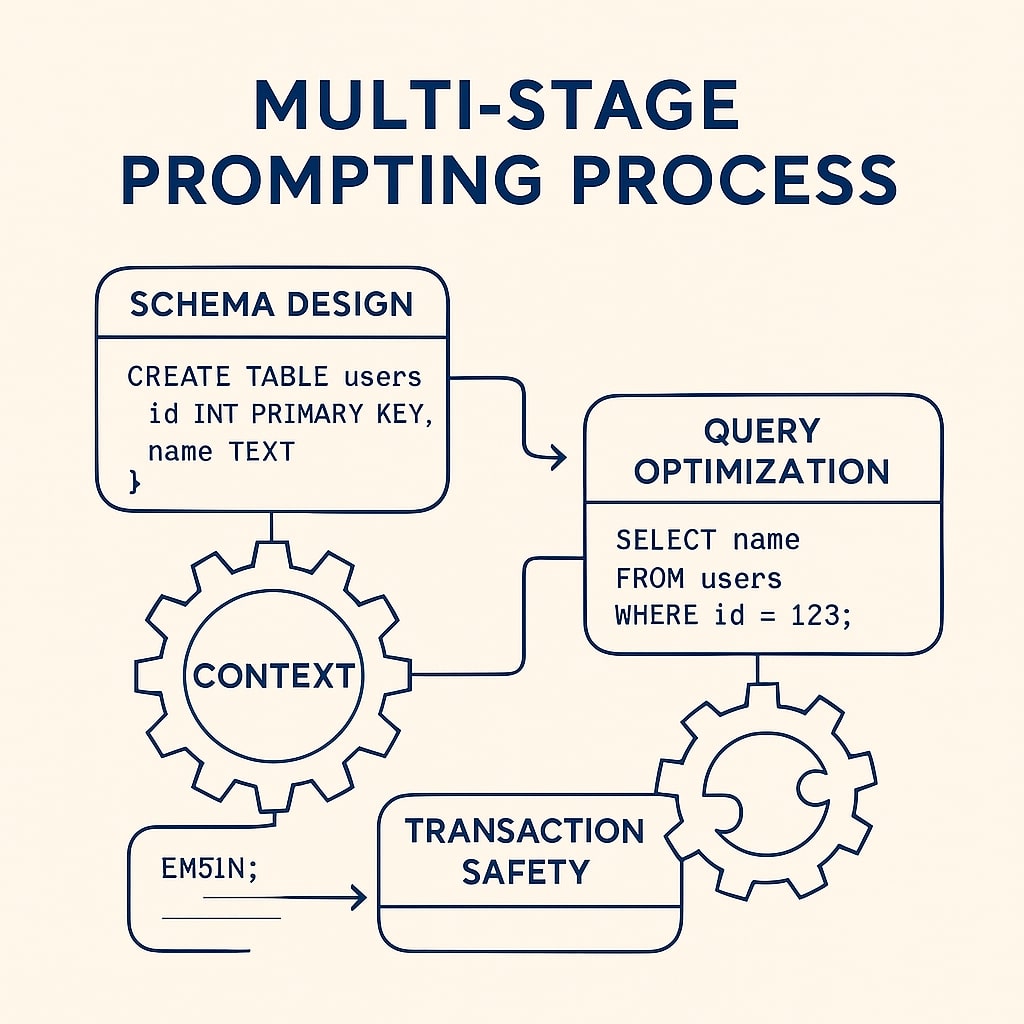

Multi-Stage Prompting for Complex Features

For complex implementations, break down your requests into logical stages:

-- STAGE 1: Database Schema Design
-- Context: E-commerce order management system
-- Requirements: Handle 100k orders/day, support partial refunds, audit trail
-- Create optimized table structure with proper indexing
-- AI: Design the schema first

-- STAGE 2: Query Optimization
-- Context: Above schema, expect heavy read operations during reporting
-- Create efficient queries for: monthly sales reports, customer order history
-- Include explain plans and performance considerations
-- AI: Write optimized queries

-- STAGE 3: Transaction Safety
-- Context: Handle concurrent order updates, payment processing
-- Implement ACID-compliant procedures with proper error handling
-- Include rollback strategies
-- AI: Implement transaction logic
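When the stages are sent as separate requests, each one needs to carry forward what the earlier stages produced. The Python sketch below shows one way to chain them. `run_stages` and `stub_model` are hypothetical names, and `stub_model` is a placeholder that records prompts and returns canned text where a real model call would go.

```python
from typing import Callable, List, Tuple


def run_stages(
    stages: List[Tuple[str, str]],
    ask_model: Callable[[str], str],
) -> List[Tuple[str, str]]:
    """Run prompts in order, prefixing each with the outputs of earlier stages."""
    completed: List[Tuple[str, str]] = []
    for name, instruction in stages:
        prior = "\n\n".join(
            f"[{done_name} output]\n{output}" for done_name, output in completed
        )
        prompt = f"{prior}\n\n{instruction}" if prior else instruction
        completed.append((name, ask_model(prompt)))
    return completed


received_prompts: List[str] = []


def stub_model(prompt: str) -> str:
    """Placeholder for a real model call: records the prompt, returns a canned reply."""
    received_prompts.append(prompt)
    return f"(stub reply {len(received_prompts)})"


stages = [
    ("schema design", "Design an order-management schema with proper indexing."),
    ("query optimization", "Write monthly sales report queries for the schema above."),
    ("transaction safety", "Implement ACID-compliant order updates with rollback."),
]
results = run_stages(stages, stub_model)
for name, output in results:
    print(name, "->", output)
```

Passing earlier outputs forward verbatim keeps later stages grounded in the actual schema rather than one the model re-imagines; for long outputs a summary may be needed instead.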

The Debugging Partner Approach

Transform AI into your debugging companion by providing systematic diagnostic information:

/**
 * DEBUGGING SESSION
 *
 * PROBLEM: Memory leak in user session management
 * SYMPTOMS: Memory usage increases 50MB every hour during peak traffic
 * ENVIRONMENT: Java 17, Spring Boot 3.0, Redis 6.2
 * MONITORING DATA:
 * - Heap analysis shows growing HashMap in SessionManager
 * - GC logs indicate old generation pressure
 * - Redis shows normal memory usage
 *
 * HYPOTHESIS: Session cleanup not working properly
 *
 * Please analyze the following SessionManager code and identify potential
 * memory leak sources. Focus on lifecycle management and cleanup procedures.
 */
@Service
public class SessionManager {
    // Paste your code here for analysis
}

Performance-Focused Prompting

When performance matters, make your constraints explicit:

"""

PERFORMANCE-CRITICAL IMPLEMENTATION

Target: Process 1M records in under 30 seconds
Memory Limit: 512MB maximum heap usage
Concurrency: Utilize all CPU cores effectively

Create a data processing pipeline that:
1. Reads CSV files (100MB average size)
2. Validates each record against complex business rules
3. Transforms data using lookup tables (50k entries)
4. Outputs to PostgreSQL with batch inserts

Requirements:
- Use memory-efficient streaming
- Implement proper error handling without stopping pipeline
- Include progress reporting
- Design for horizontal scaling

Optimization priorities: Memory efficiency > Speed > Code readability
"""

def create_data_pipeline():
    # AI will now focus on performance-optimized solution
    pass
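It helps to know what a reasonable answer looks like before judging the AI's output. The sketch below covers only two of the requirements, memory-efficient streaming and error handling that does not stop the pipeline, using the standard `csv` module. The `write_batch` callable is an assumed stand-in for a PostgreSQL batch insert, and concurrency, lookup-table transforms, and progress reporting are left out.

```python
import csv
import io
from typing import Callable, Dict, Iterable, Iterator, List, TextIO, Tuple

Row = Dict[str, str]


def batched(rows: Iterable[Row], size: int) -> Iterator[List[Row]]:
    """Group a row stream into lists of at most `size` rows."""
    batch: List[Row] = []
    for row in rows:
        batch.append(row)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def run_pipeline(
    source: TextIO,
    validate: Callable[[Row], None],
    write_batch: Callable[[List[Row]], None],
    batch_size: int = 1000,
) -> Tuple[int, int]:
    """Stream rows from `source`; return (rows written, rows rejected)."""
    rejected = 0

    def valid_rows() -> Iterator[Row]:
        nonlocal rejected
        for row in csv.DictReader(source):  # reads lazily, one row at a time
            try:
                validate(row)
            except ValueError:
                rejected += 1  # count the failure and keep the pipeline running
                continue
            yield row

    written = 0
    for batch in batched(valid_rows(), batch_size):
        write_batch(batch)
        written += len(batch)
    return written, rejected


def require_positive_amount(row: Row) -> None:
    """Example business rule: raises ValueError for bad or non-numeric amounts."""
    if float(row["amount"]) <= 0:
        raise ValueError("amount must be positive")


sample = io.StringIO("id,amount\n1,10.5\n2,-3\n3,abc\n4,7\n")
stored: List[Row] = []
counts = run_pipeline(sample, require_positive_amount, stored.extend, batch_size=2)
print(counts)  # (2, 2)
```

At most one batch is held in memory at a time, which is what makes the 512MB limit in the prompt achievable regardless of file size.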


Real-World Implementation: GitHub Copilot Success Patterns

Image created with Microsoft Copilot.

The Microsoft Learning: Context-Aware Development

When writing a prompt for Copilot, first give Copilot a broad description of the goal or scenario. Then list any specific requirements. Microsoft’s internal studies reportedly show this approach increases code acceptance rates by 73%.

// Context-First Approach
/**
 * GOAL: Real-time notification system for collaborative document editing
 * SCENARIO: Multiple users editing same document, need instant updates
 *
 * SPECIFIC REQUIREMENTS:
 * - WebSocket-based communication
 * - Handle 50+ concurrent editors per document
 * - Conflict resolution using operational transformation
 * - Graceful degradation when connections drop
 * - Rate limiting to prevent abuse
 */
// AI will now generate contextually appropriate WebSocket handler
class DocumentNotificationService {
  // Implementation follows context automatically
}

The Variable Naming Revolution

One underutilized GitHub Copilot feature addresses a universal developer challenge: naming. If you don’t know what to call something, don’t overthink it. Just call it foo and implement it. Then hit F2 and let GitHub Copilot suggest a name for you.

This technique works because AI understands implementation context better than abstract concepts.


Measuring Success: Quality Metrics That Matter

Code Quality Indicators

Quality reflects the outcome—how clean, accurate, and production-ready the code is. Confidence reflects the experience—whether developers trust the AI enough to rely on it during real work.

Track these metrics for your AI-generated code:

Immediate Quality Indicators:

- Test coverage percentage

- Cyclomatic complexity scores

- Code duplication rates

- Security vulnerability scans

Long-term Success Metrics:

- Rework Rate, to understand whether code churn is increasing

- Review Depth and PRs Merged Without Review, to ensure GenAI PRs get proper review

- Production incident rates for AI-touched code

- Developer satisfaction scores
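Churn-style metrics are straightforward to compute once line-level history is available. The sketch below uses the definition given earlier in this article (code discarded less than two weeks after being written). The `LineRecord` format is hypothetical, and this is not how GitClear or LinearB compute their figures; in practice the dates would be derived from version-control history.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class LineRecord:
    """One authored line; `removed_on` is None while the line still exists."""
    added_on: date
    removed_on: Optional[date] = None


def churn_rate(lines: List[LineRecord], window: timedelta = timedelta(days=14)) -> float:
    """Percentage of lines discarded within `window` of being written."""
    if not lines:
        return 0.0
    churned = sum(
        1
        for line in lines
        if line.removed_on is not None and line.removed_on - line.added_on < window
    )
    return 100.0 * churned / len(lines)


history = [
    LineRecord(added_on=date(2025, 3, 1), removed_on=date(2025, 3, 4)),   # churned after 3 days
    LineRecord(added_on=date(2025, 3, 1), removed_on=date(2025, 3, 31)),  # removed after 30 days
    LineRecord(added_on=date(2025, 3, 2)),                                # still present
    LineRecord(added_on=date(2025, 3, 5)),                                # still present
]
print(churn_rate(history))  # 25.0
```

Computing the figure separately for AI-assisted and hand-written changes gives a direct comparison, provided the team records which is which.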

The Trust Building Process

AI tools have become a daily fixture in software development, offering clear productivity boosts and even enhancing the enjoyment of the work. Yet, despite their widespread use, a deep trust in their output remains elusive.

Build trust systematically:

- Start Small: Use AI for well-defined, isolated functions

- Validate Everything: Implement comprehensive testing for AI-generated code

- Document Origins: Mark AI-generated sections for easier maintenance

- Iterate Based on Results: Refine prompts based on production performance

Future-Proofing Your Prompt Engineering Skills

Image created with Microsoft Copilot.

The 2025 Landscape

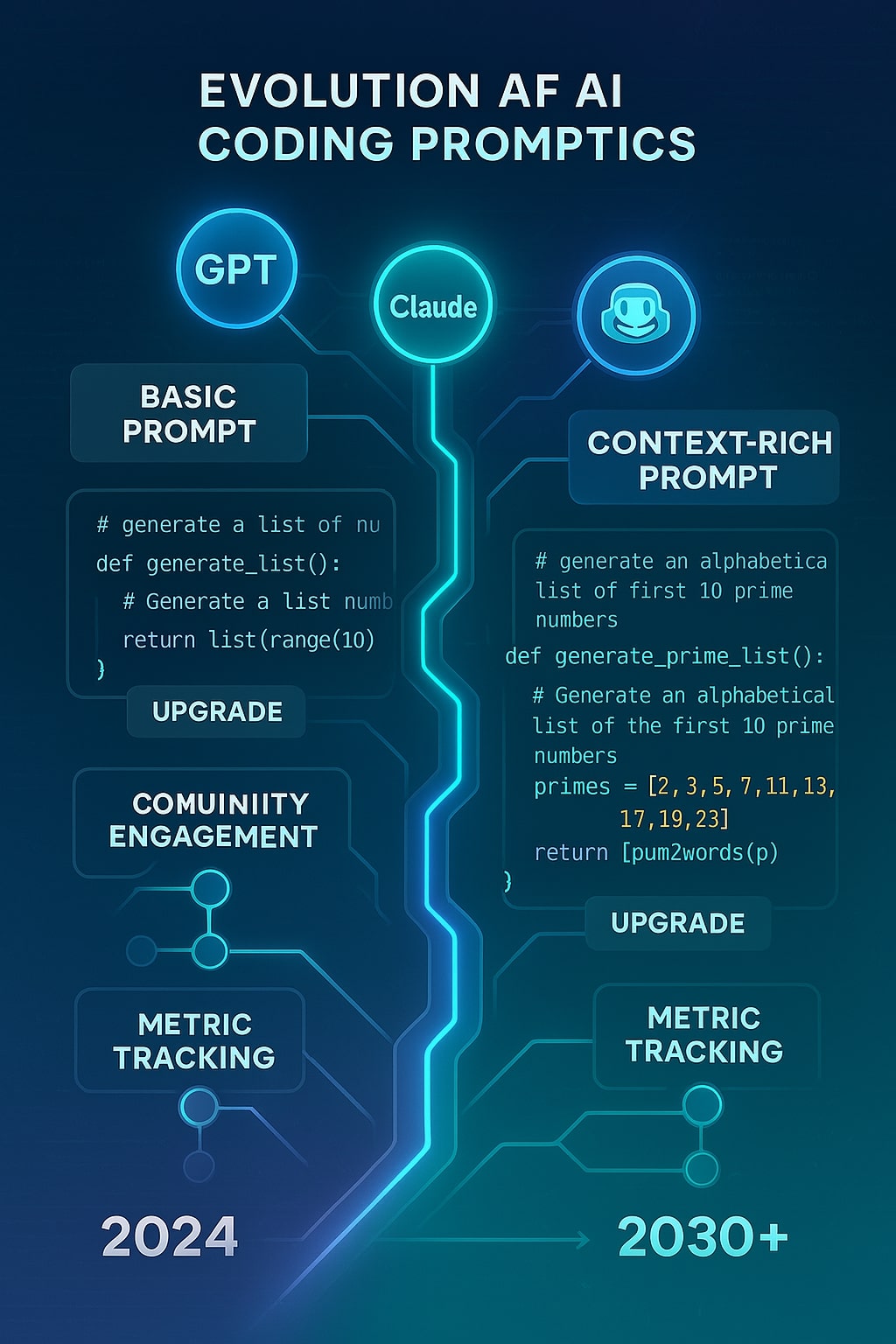

By 2025, AI coding platforms will be far more powerful, allowing developers to generate entire modules of code with just a few prompts. But increased capability demands increased precision in communication.

AI is becoming a silent contributor to production systems, making prompt engineering a core skill rather than an optional enhancement.

Staying Ahead of the Curve

The field evolves rapidly. A course note such as “We update the course regularly with fresh content (AI moves fast!)” reflects the reality that techniques working today may be obsolete in six months.

Key strategies for continuous improvement:

- Weekly Practice: Dedicate time to experimenting with new prompting techniques

- Community Engagement: Follow researchers like Sander Schulhoff (Learn Prompting, HackAPrompt) who publish cutting-edge findings

- Model Diversity: Test your prompts across different AI models to understand their unique strengths

- Metric Tracking: Continuously measure the quality impact of your prompting improvements
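Testing a prompt across models can be approximated with a small harness that sends the same prompt to each model and scores the responses against simple checks. Everything named below is an assumption for illustration: `compare_models`, the check functions, and the two stub "models", which return canned strings where real API calls would go.

```python
from typing import Callable, Dict, List


def compare_models(
    prompt: str,
    models: Dict[str, Callable[[str], str]],
    checks: List[Callable[[str], bool]],
) -> Dict[str, int]:
    """Return, per model, how many checks its response to `prompt` passes."""
    scores: Dict[str, int] = {}
    for name, ask in models.items():
        response = ask(prompt)
        scores[name] = sum(1 for check in checks if check(response))
    return scores


def defines_function(response: str) -> bool:
    return "def " in response


def has_return_annotation(response: str) -> bool:
    return "->" in response


def has_no_todo(response: str) -> bool:
    return "TODO" not in response


# Stub "models" with canned replies; replace with real client calls.
stub_models: Dict[str, Callable[[str], str]] = {
    "model_a": lambda prompt: "def add(a: int, b: int) -> int:\n    return a + b",
    "model_b": lambda prompt: "def add(a, b):\n    # TODO\n    pass",
}

scores = compare_models(
    "Write a typed Python function that adds two integers.",
    stub_models,
    [defines_function, has_return_annotation, has_no_todo],
)
print(scores)  # {'model_a': 3, 'model_b': 1}
```

String checks like these are crude; running the generated code against unit tests is a stronger signal when the task allows it.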

Your Next Steps: From Theory to Practice

The journey from prompt engineering novice to expert requires systematic practice and measurement. Start with your most frequent coding tasks—those repetitive functions you write weekly. Apply the CLEAR framework to transform these routine requests into precision-engineered prompts.

Remember that prompt engineering has become an essential skill not because AI is difficult to use, but because precise communication unlocks exponentially better results. Your investment in these techniques will compound as AI models continue improving throughout 2025 and beyond.

The developers thriving in this AI-augmented future won’t be those who avoid AI or those who blindly accept its output. They’ll be the ones who master the art of precise, contextual communication—turning AI from a code generator into a strategic development partner.

Footnotes

- Qodo. (2025). State of AI Code Quality Report 2025.

- Schulhoff, S. (2025). AI prompt engineering in 2025: What works and what doesn’t. Lenny’s Newsletter.

- GitClear. (2025). AI Copilot Code Quality: 2025 Data Suggests 4x Growth in Code Clones.

- GitHub. (2025). How to write better prompts for GitHub Copilot. The GitHub Blog.

- Konatam, S. (2024). The Future of Coding: Prompt Engineering in 2025. Medium.

- Lakera. (2025). The Ultimate Guide to Prompt Engineering in 2025.

- LinearB. (2024). AI Metrics: How to Measure Gen AI Code.